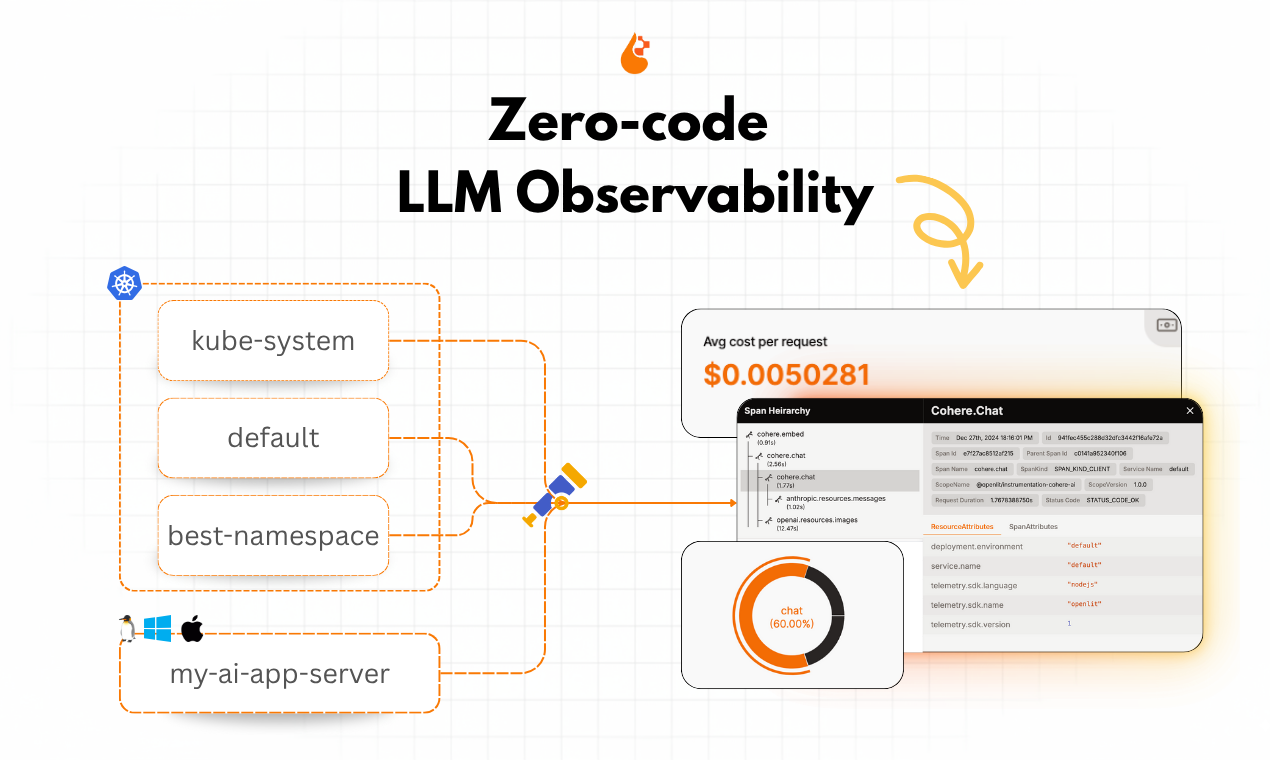

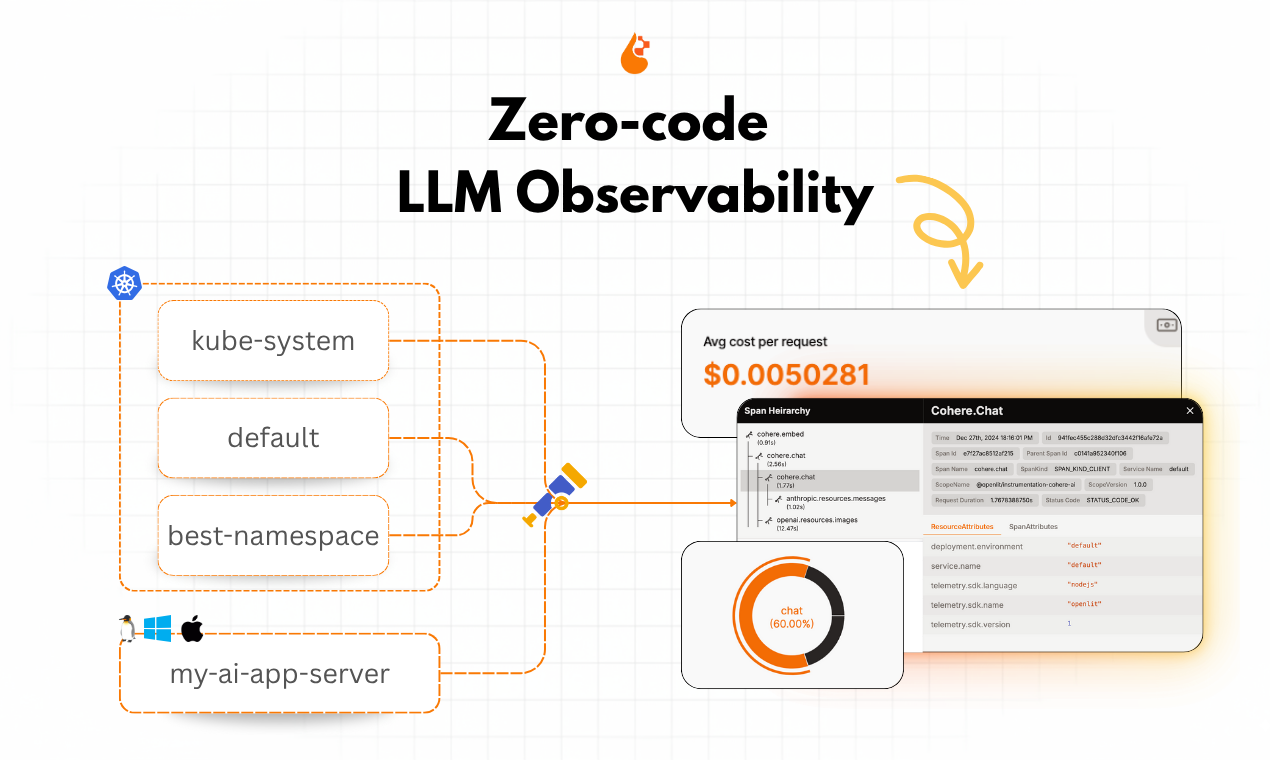

OpenLIT: Zero-code Agent Observability

Continuous feedback for testing, tracing & fixing AI agents

OpenLIT is an open-source, OpenTelemetry-native observability and AI engineering platform that delivers zero-code instrumentation for LLMs, AI agents, vector databases, and GPU workflows. It provides comprehensive, out-of-the-box visibility through distributed traces and metrics, cost and token usage tracking, hallucination and response quality analysis, prompt and experiment versioning, and intuitive, fully customizable dashboards.

Features

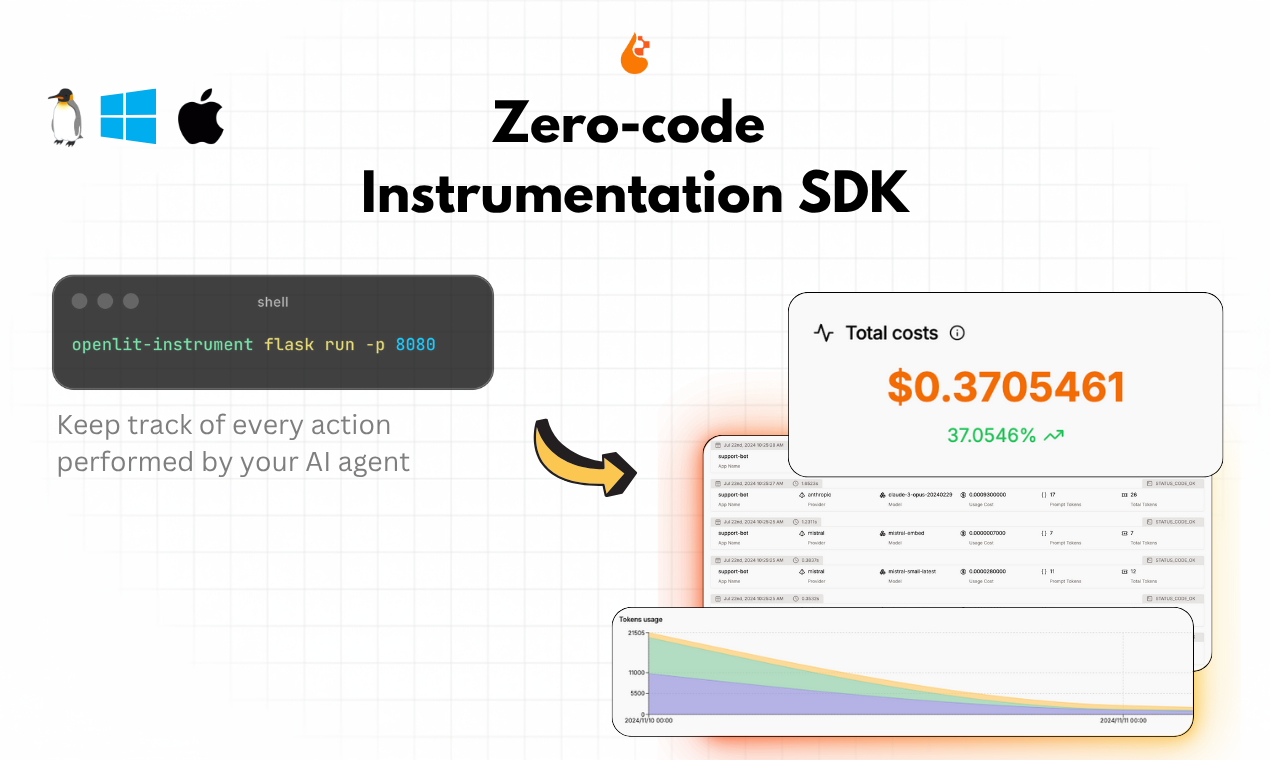

1. Zero-Code Instrumentation

- OpenLIT enables observability without needing any code changes.

- It automatically instruments LLMs, AI agents, vector databases, and GPUs without manual setup.

- This helps teams get instant visibility into their AI pipelines with minimal effort.

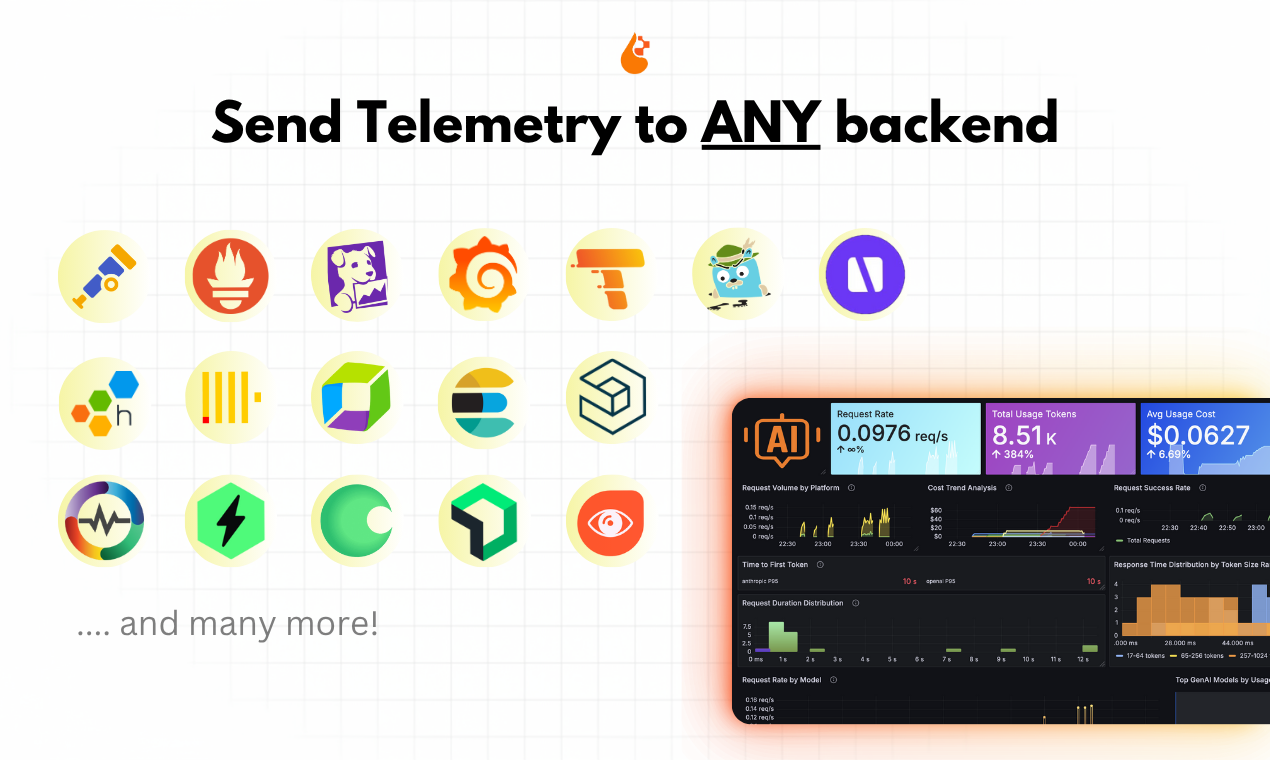

2. OpenTelemetry-Native

- Built fully on the OpenTelemetry standard, making it easy to integrate with existing monitoring tools like Grafana, Prometheus, Elastic, and New Relic.

- Ensures consistency and compatibility across various observability platforms.

3. End-to-End Tracing

- Provides detailed traces for every AI request, including model calls, retrieval steps, and tool usage in agent workflows.

- Helps identify slow or failing components and optimize performance.
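
To make the idea concrete, here is a minimal sketch of how one might scan the spans of a single trace for the slowest step and for failed steps. The `Span` record, its fields, and the sample values are hypothetical and chosen only for illustration; they are not OpenLIT's data model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Span:
    # Hypothetical, simplified span record (not OpenLIT's schema).
    name: str
    kind: str                    # illustrative labels: "llm", "retrieval", "tool"
    duration_ms: float
    error: Optional[str] = None  # None means the step succeeded


def slowest(spans: List[Span]) -> Span:
    """Return the span with the longest duration."""
    return max(spans, key=lambda s: s.duration_ms)


def failures(spans: List[Span]) -> List[Span]:
    """Return every span that recorded an error."""
    return [s for s in spans if s.error is not None]


# Made-up trace for one agent request.
trace = [
    Span("vector_search", "retrieval", 120.0),
    Span("chat_completion", "llm", 950.0),
    Span("weather_api", "tool", 300.0, error="timeout"),
]

print(slowest(trace).name)
print([s.name for s in failures(trace)])
```

In this made-up trace the model call dominates latency while the external tool is the step that failed, which is the kind of distinction per-step traces are meant to surface.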

4. Metrics and Cost Tracking

- Tracks latency, throughput, token usage, and costs per model, request, or environment.

- Enables detailed cost analysis and optimization for multi-model or multi-provider setups.
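
As a rough illustration of per-request cost tracking, the sketch below derives cost from token counts and sums it per model. The model names and prices are invented placeholders, and the `Usage` record is an assumption made for this example rather than anything OpenLIT defines.

```python
from dataclasses import dataclass
from typing import Dict, Iterable

# Hypothetical prices in USD per 1,000 tokens; real provider pricing differs.
PRICES_PER_1K = {
    "model-a": {"input": 0.50, "output": 1.50},
    "model-b": {"input": 0.10, "output": 0.40},
}


@dataclass
class Usage:
    # Token counts for one request (illustrative record).
    model: str
    input_tokens: int
    output_tokens: int


def request_cost(usage: Usage) -> float:
    """Cost of a single request in USD, from its token counts."""
    price = PRICES_PER_1K[usage.model]
    return (usage.input_tokens * price["input"]
            + usage.output_tokens * price["output"]) / 1000


def cost_by_model(usages: Iterable[Usage]) -> Dict[str, float]:
    """Total cost per model across many requests."""
    totals: Dict[str, float] = {}
    for u in usages:
        totals[u.model] = totals.get(u.model, 0.0) + request_cost(u)
    return totals
```

Grouping by environment or by request ID instead of by model would follow the same pattern with a different dictionary key.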

5. Hallucination and Quality Detection

- Detects and flags potential hallucinations or low-quality responses.

- Offers simple evaluation metrics to assess response reliability.

6. Prompt Management and Experiment Tracking

- Stores and versions prompts for consistent experimentation.

- Allows for the comparison of model outputs, helping teams refine prompts and maintain quality control.

7. Self-Hosted and Privacy-Focused

- Being open-source, OpenLIT can be fully self-hosted.

- Keeps all observability data within your infrastructure, ensuring security and compliance.

8. Dashboards and Visualization

- Includes ready-to-use dashboards for latency, cost, token usage, GPU performance, and error tracking.

Use Cases

1. Debugging AI Applications

- Trace entire LLM and agent workflows to pinpoint the exact cause of poor or incorrect responses.

- Identify whether errors originate from the model, the retrieval step, or the external tool.

2. Cost Optimization

- Monitor token usage and costs across models and environments.

- Compare different LLMs to find the best balance between accuracy, latency, and price.

3. Performance Monitoring

- Observe response times and throughput to ensure stable and efficient AI services.

- Track GPU usage for locally hosted or fine-tuned models to optimize hardware utilization.

4. Regression Testing and Version Control

- Track prompt versions and compare new model responses with previous ones.

- Detect performance regressions before deployment.
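
One simple way to picture such a regression check is to compare responses from a new prompt or model version against stored baseline responses. The sketch below uses plain string similarity from Python's `difflib` as a crude stand-in for a real quality metric; the function name, the threshold, and the data shape are assumptions made for illustration.

```python
from difflib import SequenceMatcher
from typing import Dict, List, Tuple


def regressions(baseline: Dict[str, str],
                candidate: Dict[str, str],
                threshold: float = 0.8) -> List[Tuple[str, float]]:
    """Return (case_id, similarity) for cases whose new response drifted.

    Similarity is a character-level ratio in [0, 1]; it says nothing about
    factual correctness, so treat flagged cases as prompts for human review.
    """
    flagged = []
    for case_id, old in baseline.items():
        new = candidate.get(case_id, "")  # a missing response counts as empty
        score = SequenceMatcher(None, old, new).ratio()
        if score < threshold:
            flagged.append((case_id, round(score, 2)))
    return flagged
```

A pre-deployment job could run a fixed set of test inputs through the new version and block the release when this list is non-empty.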

5. Monitoring AI Agents and Tools

- Understand how often agents invoke different tools and how each tool impacts latency and reliability.

- Spot slow or error-prone tools quickly.
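
The per-tool view described above amounts to grouping tool calls and summarizing each group. A minimal sketch, assuming tool calls are available as hypothetical `(tool_name, latency_ms, succeeded)` tuples:

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple


def tool_stats(calls: Iterable[Tuple[str, float, bool]]) -> Dict[str, dict]:
    """Summarize call count, mean latency, and error rate per tool."""
    grouped = defaultdict(list)
    for tool, latency_ms, ok in calls:
        grouped[tool].append((latency_ms, ok))

    stats = {}
    for tool, rows in grouped.items():
        stats[tool] = {
            "calls": len(rows),
            "mean_latency_ms": mean([latency for latency, _ in rows]),
            "error_rate": sum(1 for _, ok in rows if not ok) / len(rows),
        }
    return stats
```

Sorting the result by `error_rate` or `mean_latency_ms` gives a quick shortlist of tools worth investigating.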

6. Compliance and Security

- Use the self-hosted setup to ensure all telemetry data remains within your secure infrastructure.

- Ideal for industries with strict data protection requirements, such as finance or healthcare.

7. Setting AI Service-Level Objectives (SLOs)

- Define measurable goals such as latency limits, cost per request, or acceptable hallucination rates.

- Integrate alerts into existing observability systems for proactive monitoring.
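
As a sketch of what checking such objectives could look like, the example below evaluates three illustrative SLOs against a window of observations. The `Slo` fields, the nearest-rank p95 calculation, and the violation labels are assumptions for this example; in practice the thresholds would feed whatever alerting system is already in place.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Slo:
    # Illustrative objectives; pick thresholds that fit your service.
    max_p95_latency_ms: float
    max_cost_per_request_usd: float
    max_hallucination_rate: float


def p95(values: Sequence[float]) -> float:
    """95th percentile using the nearest-rank method (needs non-empty input)."""
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer arithmetic
    return ordered[rank - 1]


def violations(slo: Slo,
               latencies_ms: Sequence[float],
               costs_usd: Sequence[float],
               flagged: Sequence[bool]) -> List[str]:
    """Return the names of the objectives that the observed window breaks."""
    found = []
    if p95(latencies_ms) > slo.max_p95_latency_ms:
        found.append("p95_latency")
    if sum(costs_usd) / len(costs_usd) > slo.max_cost_per_request_usd:
        found.append("cost_per_request")
    # `flagged` holds one boolean per response: True if it was flagged
    # as a potential hallucination.
    if sum(flagged) / len(flagged) > slo.max_hallucination_rate:
        found.append("hallucination_rate")
    return found
```

An empty result means the window met every objective; any returned name can be mapped to an alert.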

8. Onboarding and Knowledge Sharing

- Use OpenLIT traces and dashboards as learning tools for new engineers.

- Helps them understand real AI workflows and system interactions without needing deep system access.

Comments

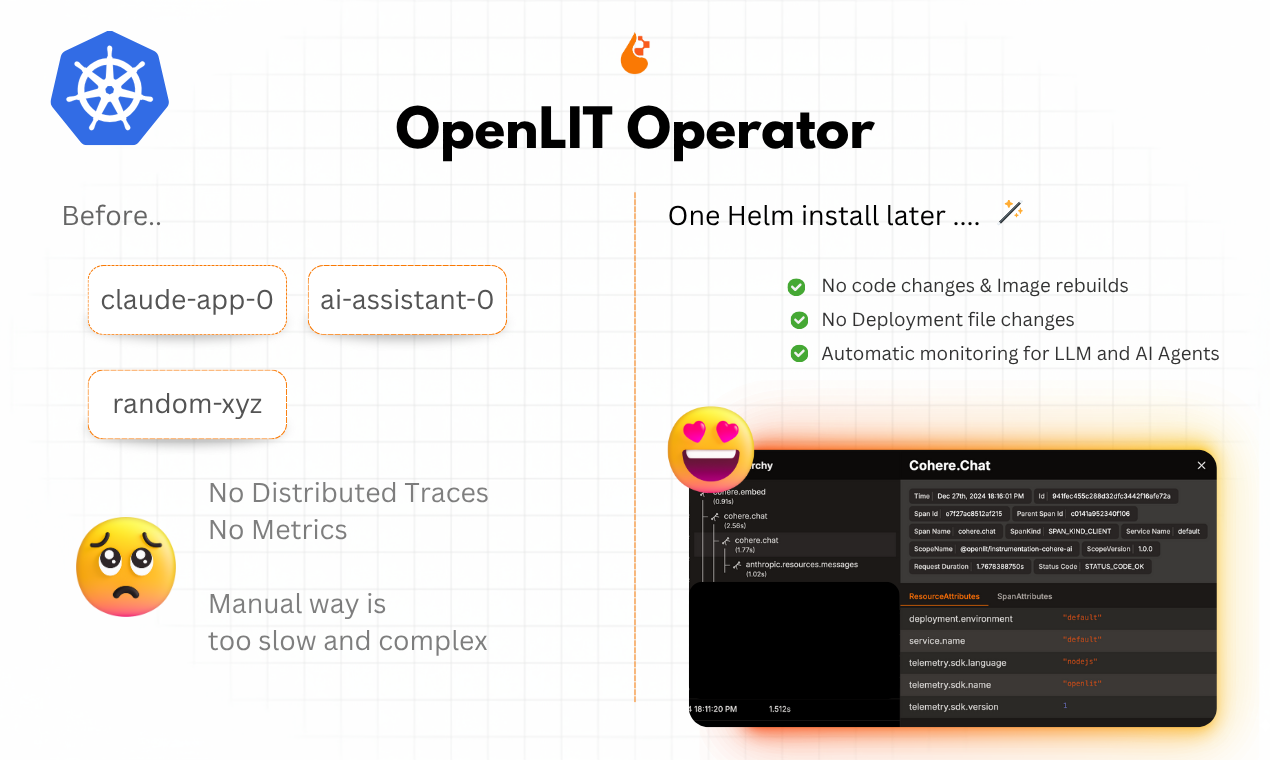

Hey Fazier! 👋👋👋 I'm Patcher, founder and maintainer of OpenLIT.

After speaking with over 50 engineering teams in the past year, we consistently heard the same frustration: "We want to monitor our LLMs and Agents, but changing code and redeploying would slow down our launch."

Every team told us the same story: even though most LLM monitoring tools only require a few lines of integration code, the deployment overhead kills momentum. They'd spend days testing changes, rebuilding Docker images, updating deployment files, and coordinating deployments to get basic LLM monitoring. At scale, it's worse: imagine modifying and redeploying 10+ AI services individually.

That's why we built OpenLIT with true zero-code observability: no code changes, no image rebuilds, no deployment file changes.

Two paths, same result - choose what fits your setup:

- ☸️ Kubernetes teams: `helm install openlit-operator` + restart your pods. Done.

- 💻 Everyone else: `openlit-instrument python your_app.py` on Linux, Windows, or Mac. That's it.

We also learned teams have strong opinions about their observability stack, so while we use OpenLIT instrumentations by default, you can bring your own (OpenLLMetry, OpenInference, custom setups), and we just handle the zero-code injection part.

The best part? It works with whatever you're already using - OpenAI, Anthropic, LangChain, CrewAI, custom agents. No special SDKs or vendor lock-in.

See for yourself:

- ⭐ GitHub: https://github.com/openlit/openlit

- 📚 Docs: https://docs.openlit.io/latest/operator/overview

- 🚀 Quick Start: https://docs.openlit.io/latest/operator/quickstart

We're excited to launch OpenLIT's Zero-code LLM Observability capabilities on Product Hunt today. We'll be in the comments all day and can't wait to hear your thoughts & feedback! 👇



Copyright ©2025. All Rights Reserved