OpenLIT 2.0

Open Source Platform for AI Engineering

OpenLIT is an open-source product that helps developers build and manage AI apps in production, effectively improving their accuracy. As a self-hosted solution, developers can experiment with LLMs, manage and version prompts, securely manage API keys, and provide safeguards against prompt injection and jailbreak attempts. It also includes built-in OpenTelemetry-native observability and evaluation for the complete GenAI stack (LLMs, vector databases, and GPUs).

Features

- 📈 Analytics Dashboard: Monitor your AI application’s health and performance with detailed dashboards that track metrics, costs, and user interactions, providing a clear view of overall efficiency.

- 🔌 OpenTelemetry-native Observability SDKs: Vendor-neutral SDKs to send traces and metrics to your existing observability tools.

- 💲 Cost Tracking for Custom and Fine-Tuned Models: Tailor cost estimations for specific models using custom pricing files for precise budgeting.

- 🔒 Guardrails: Implement security measures and input validation to protect against malicious injection attacks.

- 🧪 Evaluations: Assess LLM responses to ensure accuracy and relevance.

- 🔑 API Keys and Secrets Management: Securely handle your API keys and secrets centrally, avoiding insecure practices.

- 🐛 Exceptions Monitoring Dashboard: Quickly spot and resolve issues by tracking common exceptions and errors with a dedicated monitoring dashboard.

- 💭 Prompt Management: Manage and version prompts using Prompt Hub for consistent and easy access across applications.

- 🔑 API Keys and Secrets Management: Securely handle your API keys and secrets centrally, avoiding insecure practices.

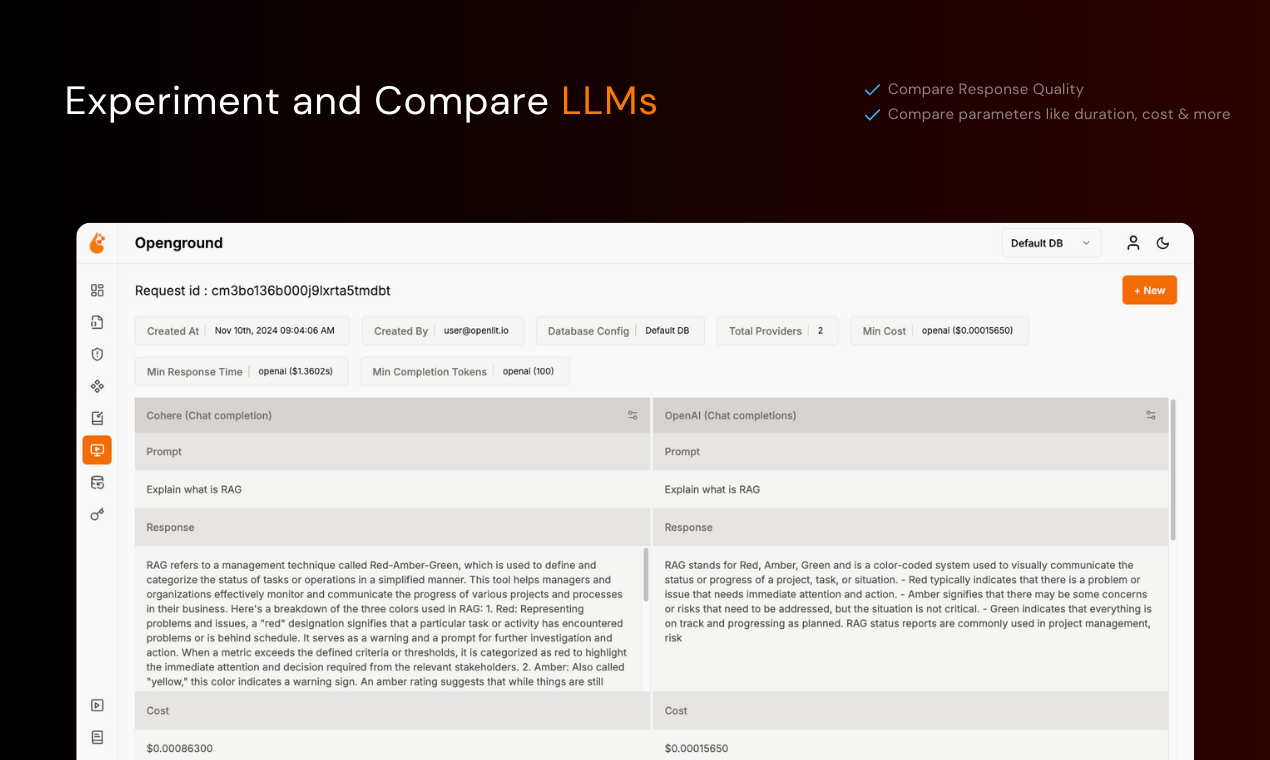

- 🎮 Experiment with different LLMs: Use OpenGround to explore, test, and compare various LLMs side by side.

Use Cases

- Real-time Application Monitoring: Use the Analytics Dashboard to monitor performance metrics and exceptions in real-time, allowing developers to quickly identify and resolve performance issues or bugs in AI applications.

- Secure Development Practices: Implement Guardrails and centralized API Keys and Secrets Management to ensure secure code and configuration practices, reducing the risk of vulnerabilities such as injection attacks.

- Cost-effective Model Training: Utilize Cost Tracking for Custom Models to develop cost-efficient AI models by accurately estimating training expenses, helping teams stay within budget constraints.

- Continuous Improvement of AI Outputs: Incorporate Evaluations to consistently assess LLM outputs, enabling developers to iteratively refine model responses for accuracy and relevance in user applications.

- Prototype and Test AI Solutions: Use OpenGround to rapidly prototype, test, and compare different large language models, helping developers find the best fit for their specific application needs through experimentation.

Comments

OpenLIT is a powerful, self-hosted solution for building and managing AI apps in production. With features like prompt versioning, API key security, and safeguards against injection attacks, it ensures reliability. Its OpenTelemetry-native observability for LLMs, vector databases, and GPUs makes it a standout tool for optimizing AI performance and accuracy.

Hello Fazier community!!! I'm Patcher, the maintainer of OpenLIT, and I'm thrilled to announce our second launch—OpenLIT 2.0! 🚀 With this version, we're enhancing our open-source, self-hosted AI Engineering and analytics platform to make it even more powerful and effortless to integrate. We understand the challenges of evolving an LLM MVP into a robust product—high inference costs, debugging hurdles, security issues, and performance tuning can be hard AF. OpenLIT is designed to provide essential insights and ease this journey for all of us developers. Here's what's new in OpenLIT 2.0: - ⚡ OpenTelemetry-native Tracing and Metrics - 🔌 Vendor-neutral SDK for flexible data routing - 🔍 Enhanced Visual Analytical and Debugging Tools - 💭 Streamlined Prompt Management and Versioning - 👨👩👧👦 Comprehensive User Interaction Tracking - 🕹️ Interactive Model Playground - 🧪 LLM Response Quality Evaluations As always, OpenLIT remains fully open-source (Apache 2) and self-hosted, ensuring your data stays private and secure in your environment while seamlessly integrating with over 30 GenAI tools in just one line of code. Check out our Quickstart Guide (https://docs.openlit.io/latest/quickstart-observability) to see how OpenLIT 2.0 can streamline your AI development process. If you're on board with our mission and vision, we'd love your support with a ⭐ star on GitHub (https://github.com/openlit/openlit). I'm here to chat and eager to hear your thoughts on how we can continue improving OpenLIT for you! 😊

Premium Products

Sponsors

BuyMakers

Makers

Comments

OpenLIT is a powerful, self-hosted solution for building and managing AI apps in production. With features like prompt versioning, API key security, and safeguards against injection attacks, it ensures reliability. Its OpenTelemetry-native observability for LLMs, vector databases, and GPUs makes it a standout tool for optimizing AI performance and accuracy.

Hello Fazier community!!! I'm Patcher, the maintainer of OpenLIT, and I'm thrilled to announce our second launch—OpenLIT 2.0! 🚀 With this version, we're enhancing our open-source, self-hosted AI Engineering and analytics platform to make it even more powerful and effortless to integrate. We understand the challenges of evolving an LLM MVP into a robust product—high inference costs, debugging hurdles, security issues, and performance tuning can be hard AF. OpenLIT is designed to provide essential insights and ease this journey for all of us developers. Here's what's new in OpenLIT 2.0: - ⚡ OpenTelemetry-native Tracing and Metrics - 🔌 Vendor-neutral SDK for flexible data routing - 🔍 Enhanced Visual Analytical and Debugging Tools - 💭 Streamlined Prompt Management and Versioning - 👨👩👧👦 Comprehensive User Interaction Tracking - 🕹️ Interactive Model Playground - 🧪 LLM Response Quality Evaluations As always, OpenLIT remains fully open-source (Apache 2) and self-hosted, ensuring your data stays private and secure in your environment while seamlessly integrating with over 30 GenAI tools in just one line of code. Check out our Quickstart Guide (https://docs.openlit.io/latest/quickstart-observability) to see how OpenLIT 2.0 can streamline your AI development process. If you're on board with our mission and vision, we'd love your support with a ⭐ star on GitHub (https://github.com/openlit/openlit). I'm here to chat and eager to hear your thoughts on how we can continue improving OpenLIT for you! 😊

Premium Products

New to Fazier?

Find your next favorite product or submit your own. Made by @FalakDigital.

Copyright ©2025. All Rights Reserved